
At the beginning of the pandemic, I decided to build a custom keyboard (more on this in a later post). The project led me to want a BlackBerry trackball, which would require a special mount to attach it to the keyboard PCB. After weeks of trying to jury-rig a mount from common parts, I realized that it would be much easier to design and 3D print the necessary part.

I also needed a better way to document my projects, and a blog is better for visibility and accessibility than a markdown README file on GitHub. Ghost is a good candidate, offering a lightweight, straightforward writing platform (with markdown support). There's also a robust list of third-party integrations, which would be useful down the road if I needed to scale.

I also wanted to be able to back up my data easily in case I needed to move to a different host. At first, I was eyeing an AWS S3 bucket or perhaps Linode's offering called "Object Storage." At the moment, though, I don't need much backup space, so a free option would be best. This is where Rclone comes in handy: paired with a script that tarballs the essential Ghost files, it gives me a daily backup to cloud storage. I could also deploy Rclone on another server, such as a local NAS, as an additional backup destination.

I chose Terraform to automate the setup tasks so that I can easily switch hosts in the future. One advantage of Terraform is that it uses a declarative language to define tasks, which, to me, is a lot easier to read and understand later on. If you haven't looked at your code in over six months, it may as well have been written by someone else.

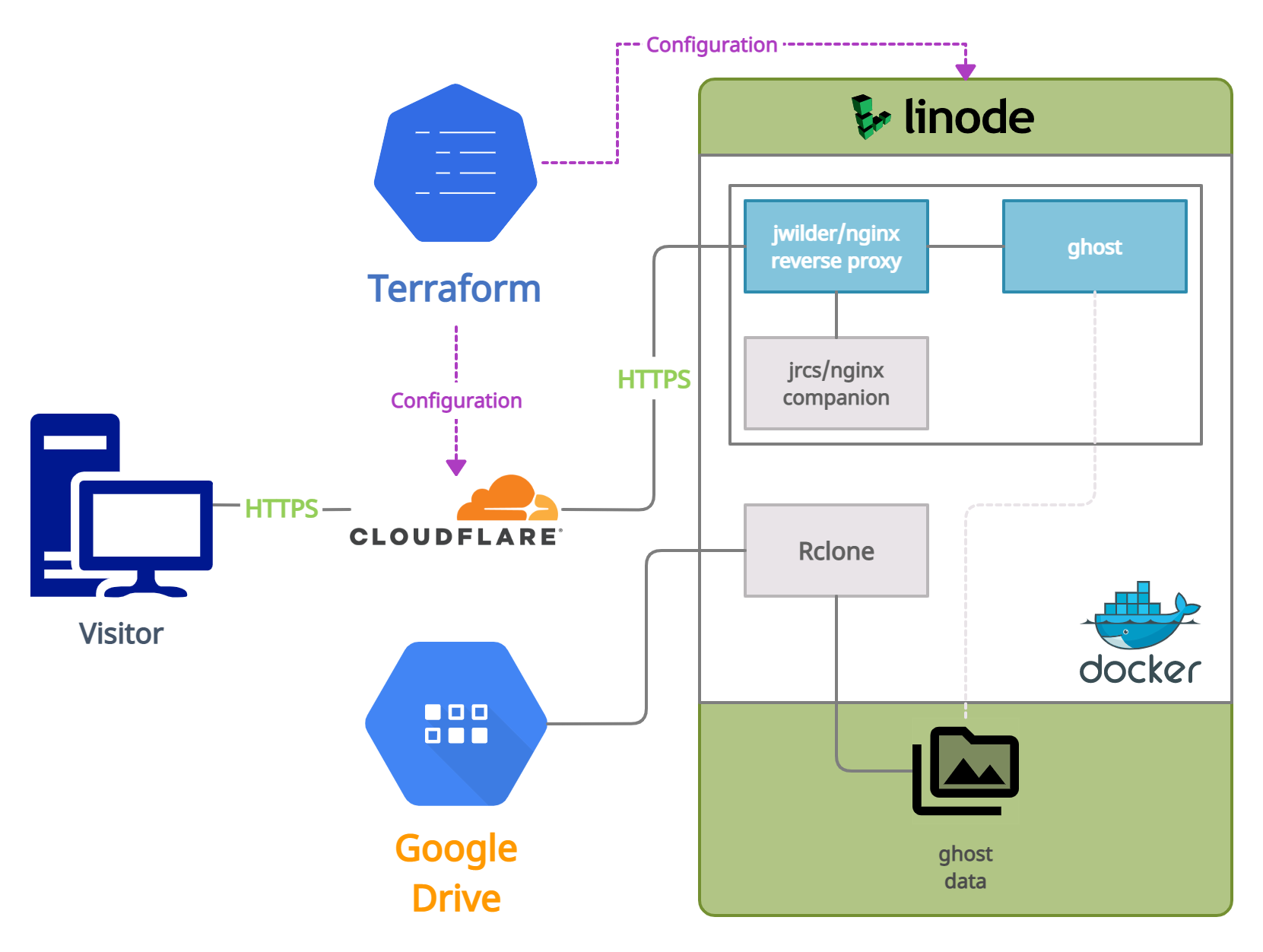

Project Overview

- Terraform deployment

- Cloudflare proxied DNS

- Docker

- Nginx reverse proxy

- Let's Encrypt for automatic SSL

- Ghost 4

- Rclone for backing up blog data to Google Drive

This project can be found on my GitHub. Clone it and follow along:

```bash
git clone https://github.com/foureight84/ghost-linode-terraform.git && cd ghost-linode-terraform
```

Terraform

Terraform is a declarative, configuration-based infrastructure-as-code tool that wraps provider APIs. There are a few pros and cons to consider. Keep in mind that this is my first time using Terraform, so these are surface-level observations.

Pros:

- Quick and straightforward (standardized) configuration syntax declaring what tasks to perform.

- Easy to read and double-check my work. Terraform's plan command checks for syntax errors and previews the final result.

- Configurations can be changed and applied quickly without having to go through service consoles.

- All pieces in my stack can be managed through Terraform (see cons).

Cons:

- Providers often offer web APIs that perform the same tasks. At best, the Terraform provider reaches feature parity with them, but parity may be a lower priority for a provider, so Terraform support can lag behind.

- Not all services may have Terraform support, which could lead to the additional complexity of having to manage multiple tools. This is often the case in a real-world scenario.

- Applying Terraform configuration updates doesn't always behave as expected, and the limitations depend greatly on the provider. For example, after a Linode is created, changing certain properties such as the root password causes Terraform to destroy the instance and create a new node with the updated configuration.

Before diving into specific files in the project, here is a brief overview and explanation of the project structure:

```
[..root]
│   cloudflare.tf              // resource module for Cloudflare rules
│   data.tf                    // templates, e.g. docker-compose.yaml, bash scripts, etc.
│   linode.tf                  // resource module for Linode instance setup
│   outputs.tf                 // specific data to display after terraforming
│   providers.tf               // tokens, auth keys, etc. required by service providers
│   terraform.tfvars.example   // example answers for input prompts
│   variables.tf               // defined inputs required for terraforming
│   versions.tf                // declaration of providers and versions to use
│
└─[scripts]
    ├─[linode]
    │     docker-compose.yaml  // main stack: nginx proxy, letsencrypt, ghost
    │     stackscript.sh       // boot-time script specific to Linode to set up the env
    │
    └─[rclone]
        │   backup.sh            // cron script for backing up the ghost blog directory
        │   docker-compose.yaml  // rclone docker application
        │
        └─[config]
              rclone.conf.example  // rclone configuration for cloud storage
```

Linode

linode.tf, stackscript.sh, data.tf

This is a straightforward configuration that creates a Linode using Linode's Stackscript feature. A Stackscript is essentially a run-once bash script that gets executed on the first boot.

The stackscript.sh file lives in the ghost-linode-terraform/scripts/linode/ directory and is parsed by Terraform as a data template. Terraform's templating syntax needs to be taken into account when parsing text files, most notably variables and their escape characters. Any ${string} notation is treated as a template variable, so a bash variable written with braces must be escaped as $${string}, which renders as a literal ${string}. A bare $string without braces is left untouched. More about strings and templates.

```hcl
script = "${data.template_file.stackscript.rendered}"
```

Alongside the Stackscript, the "linode_instance" resource block also includes a stackscript_data property. This is how data is provided to the one-time boot script: the key-value assignments within this block correspond to the 'User-defined fields' (UDFs) at the top of stackscript.sh.

```bash
#!/bin/sh
# <UDF name="DOCKER_COMPOSE" label="Docker compose file" default="" />
# <UDF name="ENABLE_RCLONE" label="(Bool) Flag to turn on RClone Support" default="false" />
# <UDF name="RCLONE_DOCKER_COMPOSE" label="RClone docker compose file" default="" />
# <UDF name="RCLONE_CONFIG" label="RClone configuration file" default="" />
# <UDF name="BACKUP_SCRIPT" label="Backup script" default="" />
```

```hcl
stackscript_data = {
  "DOCKER_COMPOSE"        = "${data.template_file.docker_compose.rendered}"
  "ENABLE_RCLONE"         = var.enable_rclone
  "RCLONE_DOCKER_COMPOSE" = "${data.template_file.rclone_docker_compose.rendered}"
  "RCLONE_CONFIG"         = "${data.template_file.rclone_config.rendered}"
  "BACKUP_SCRIPT"         = "${data.template_file.backup_script.rendered}"
}
```

```hcl
data "template_file" "docker_compose" {
  template = "${file("${path.module}/scripts/linode/docker-compose.yaml")}"
  vars = {
    "ghost_blog_url"    = "${var.ghost_blog_url}"
    "letsencrypt_email" = "${var.letsencrypt_email}"
  }
}
```

In the snippet above, the docker-compose.yaml for the Ghost stack is parsed as a data template: the ghost_blog_url and letsencrypt_email variables are evaluated, and the result is passed as a string to the Stackscript at runtime.
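To make the escaping rule concrete, here is a toy bash function that mimics what the template renderer does to a single line: ${name} gets substituted, $${ comes out as a literal ${, and a bare $VAR passes through unchanged. This is a hypothetical illustration only (the function name render_one and the sentinel string are made up, and it assumes the template never contains that sentinel); it is not how Terraform actually implements templates.

```bash
# Toy emulation of Terraform's template escaping for ONE variable.
# Not Terraform's implementation: it only handles ${name} and the $${ escape.
render_one() {
  local template="$1" name="$2" value="$3"
  local esc='$${' lit='${' guard='@@TF_ESC@@' ref out
  ref="\${$name}"                     # the interpolation to replace, e.g. ${ghost_blog_url}
  out=${template//"$esc"/"$guard"}    # 1. hide escaped "$${" so it is not substituted
  out=${out//"$ref"/"$value"}         # 2. substitute the template variable
  out=${out//"$guard"/"$lit"}         # 3. un-hide: "$${" renders as a literal "${"
  printf '%s\n' "$out"
}
```

For example, render_one 'home=$${HOME} url=${ghost_blog_url}' ghost_blog_url blog.example.com prints home=${HOME} url=blog.example.com, which is what bash later sees when the Stackscript runs.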

At deployment time, the Stackscript is created before the Linode instance, because the instance references it. Terraform allows named values to be referenced directly, as seen in the "linode_instance" block, where linode_stackscript.ghost_deploy.id is assigned as the ID of the Stackscript to include when creating the Linode instance. Finally, the other parsed data templates, such as docker-compose.yaml, are assigned to the Stackscript's user-defined fields and sent as stackscript_data.
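On the Linode side, each UDF value arrives in the Stackscript as an environment variable named after the UDF. The sketch below shows one way a boot script could turn those values back into files; it is a hypothetical bash helper (write_stack_files and the directory layout are assumptions), not the repo's actual stackscript.sh.

```bash
# Hypothetical sketch: Linode exposes each UDF value to the StackScript as an
# environment variable named after the UDF (DOCKER_COMPOSE, ENABLE_RCLONE, ...).
# The function name and file layout are illustrative assumptions.
write_stack_files() {
  local ghost_dir="$1" rclone_dir="$2"
  mkdir -p "$ghost_dir" || return 1
  printf '%s\n' "${DOCKER_COMPOSE:-}" > "$ghost_dir/docker-compose.yaml"

  # Terraform converts the bool var.enable_rclone to the string "true"/"false".
  if [ "${ENABLE_RCLONE:-false}" = "true" ]; then
    mkdir -p "$rclone_dir/config" "$rclone_dir/mount" || return 1
    printf '%s\n' "${RCLONE_DOCKER_COMPOSE:-}" > "$rclone_dir/docker-compose.yaml"
    printf '%s\n' "${RCLONE_CONFIG:-}" > "$rclone_dir/config/rclone.conf"
    printf '%s\n' "${BACKUP_SCRIPT:-}" > "$rclone_dir/backup.sh"
    chmod +x "$rclone_dir/backup.sh"
  fi
}
```

Called as write_stack_files /root/ghost /root/rclone, this would match the default directories mentioned later in this post.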

Cloudflare

The Cloudflare configuration is relatively straightforward. Since the foureight84.com domain is already managed by Cloudflare, a lookup is performed and the resulting named value is referenced in each resource as the zone_id property.

```hcl
data "cloudflare_zones" "ghost_domain_zones" {
  filter {
    name   = var.cloudflare_domain
    status = "active"
  }
}
```

An 'A' record is created for the blog (blog.foureight84.com), and end-to-end HTTPS encryption is enforced (ssl="strict"), along with a requirement that all HTTPS origin pull requests come only from Cloudflare. Nginx-proxy-companion generates a validation file under blog.foureight84.com/.well-known/<some random string> for SSL certificate requests. Because Let's Encrypt may fetch this file over either HTTP or HTTPS (ports 80 and 443), a page rule is created to ensure that the request does not get blocked.

Variables and Definitions

As seen in linode.tf, cloudflare.tf, and especially data.tf, ${var.<some string>} references are used throughout. These refer to variables declared in variables.tf, such as API tokens for our services, domain names, etc. Variables without a value show up as input prompts when running Terraform plan, apply, or destroy. Variables can have properties such as data type, description, default value, and custom validation rules. More about input variables.

This Terraform deployment requires 17 data inputs in order to perform its tasks, and that's 17 chances to introduce an error. Luckily, Terraform can read variable values from .tfvars files: key-value files where each key is a variable name. A .tfvars file is passed in at run time to provide the required inputs. For example:

```bash
terraform plan -var-file="defined.tfvars"
```

Docker

This project has two separate docker-compose environments. The first is our blog stack, and the second is Rclone to perform data backup. I decided to separate the Rclone docker service from the primary Ghost stack for two reasons:

- I want to be able to mount the cloud storage before running the Ghost stack, so that data can be restored should a backup exist (see stackscript.sh).

- The cloud drive mount should always stay active. Changes to my Ghost stack should not impact Rclone's availability.
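The first point boils down to "wait for the mount, then restore only if there is something to restore." A minimal bash sketch of that ordering might look like the following; wait_for_mount, restore_latest, and the assumption that the archive was created with tar -C <ghost dir> . are all hypothetical, not taken from the repo's stackscript.sh.

```bash
# Hypothetical helpers, not copied from the repo's stackscript.sh.

# Wait until a path shows up as a mount point (e.g. the rclone FUSE mount).
wait_for_mount() {
  local path="$1" tries="${2:-30}" i
  for ((i = 0; i < tries; i++)); do
    # Field 2 of /proc/mounts is the mount point.
    if awk -v p="$path" '$2 == p { found = 1 } END { exit !found }' /proc/mounts; then
      return 0
    fi
    sleep 1
  done
  return 1
}

# Restore latest.tgz into the Ghost directory, but only if that directory
# is missing or empty, so an existing blog is never overwritten.
restore_latest() {
  local backup_dir="$1" ghost_dir="$2"
  local archive="$backup_dir/latest.tgz"
  if [ ! -f "$archive" ]; then
    echo "no backup at $archive, starting fresh" >&2
    return 0
  fi
  if [ -d "$ghost_dir" ] && [ -n "$(ls -A "$ghost_dir")" ]; then
    echo "$ghost_dir is not empty, skipping restore" >&2
    return 0
  fi
  mkdir -p "$ghost_dir" && tar -xzf "$archive" -C "$ghost_dir"
}
```

A boot script could then run something like wait_for_mount /root/rclone/mount && restore_latest /root/rclone/mount/ghost-backup /root/ghost before starting the Ghost stack, where ghost-backup is a made-up folder name on the drive.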

Ghost Stack

This runs 3 containers using the following images:

- jwilder/nginx-proxy

- jrcs/letsencrypt-nginx-proxy-companion

- ghost:4-alpine

The default directory is /root/ghost. It can be changed through the tfvars file at deployment time. Because the variable has a default value, Terraform does not prompt for it.

jwilder/nginx-proxy

This is the front-facing container on the origin server (Linode), sitting behind Cloudflare. Visitors' requests for https://blog.foureight84.com go through Cloudflare, which forwards them to our reverse proxy, which in turn relays each request to the running Docker service whose VIRTUAL_HOST value matches. Finally, nginx-proxy collects the content served by the Ghost service and sends it back to the visitor.

The reverse proxy listens on the exposed ports 80 and 443.

jrcs/letsencrypt-nginx-proxy-companion

Nginx-proxy-companion handles automatic SSL registration for the running Docker services. The important environment variables to keep in mind are:

```yaml
environment:
  - LETSENCRYPT_HOST=${ghost_blog_url}
  - LETSENCRYPT_EMAIL=${letsencrypt_email}
```

The above environment variables are added to the Docker services that require SSL certificates (the Ghost container in this example). In the future, if I need to add more subdomains, such as www.foureight84.com, that service will require LETSENCRYPT_HOST=www.foureight84.com. I can avoid repeatedly defining LETSENCRYPT_EMAIL by setting the DEFAULT_EMAIL environment variable on the nginx-proxy-companion container instead.

By default, a production certificate is requested. Let's Encrypt enforces weekly rate limits on issuance (for example, at most 5 duplicate certificates for the same set of hostnames every 7 days), so repeated redeployments can hit the limit. All requested certificates are stored under /etc/acme.sh, which is mounted to the acme Docker volume.

The nginx-proxy and nginx-proxy-companion containers share the certs, vhost.d, and nginx.html volumes. If I need to move to a different host, the files stored in the certs and acme Docker volumes will need to be backed up. The former stores the generated private key used for SSL certificate requests, whereas the latter contains the generated certificates, which are checked on service startup. Without the original private key, the certificate cannot be renewed, and without the certificate, a new request is forced.

Avoid running docker volume prune or docker system prune --volumes without a proper backup. Strictly speaking, these volumes are not essential, since certificates can be requested again; losing them only becomes a real problem once the weekly certificate limit has been reached. To get the host path of these volumes, run docker volume inspect <volume name>.
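Before pruning or moving hosts, the certs and acme volumes could be archived with a small bash helper like the one below. It is a sketch under assumptions: backup_volume is a made-up name, it must run as root on the Docker host, and the real volume names usually carry the compose project prefix (check with docker volume ls). It relies on docker volume inspect --format '{{ .Mountpoint }}' to find the host path.

```bash
# Hypothetical helper: archive one Docker volume into <out_dir>/<name>.tgz.
# Run as root on the host. The optional third argument is an explicit
# source path, which skips the docker lookup (handy for testing).
backup_volume() {
  local name="$1" out_dir="$2" src="${3:-}"
  # Resolve the volume's host path unless one is passed explicitly.
  if [ -z "$src" ]; then
    src=$(docker volume inspect --format '{{ .Mountpoint }}' "$name") || return 1
  fi
  mkdir -p "$out_dir" || return 1
  # -C keeps paths inside the archive relative to the volume root.
  tar -czf "$out_dir/$name.tgz" -C "$src" .
}
```

Usage might be backup_volume ghost_certs /root/ssl-backup followed by backup_volume ghost_acme /root/ssl-backup (volume names assumed). On the new host, extracting each archive with tar -xzf into the matching volume's mountpoint would put the key and certificates back before the stack starts.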

ghost:4-alpine

This is an all-in-one image: by default, the official image runs Ghost with an SQLite database stored alongside the content, so no separate MySQL container is needed. This works out better for my requirements, as a single database file is easier to back up.

```yaml
ports:
  - 127.0.0.1:8080:2368
```

This remaps Ghost's default port 2368 to 8080, bound to 127.0.0.1 only. The mapping is internal and not strictly required, because the Ghost service is not directly exposed to public traffic. As mentioned earlier, the reverse proxy directs incoming requests to the service matching VIRTUAL_HOST.

Rclone

The default directory is /root/rclone but can be changed using Terraform tfvars at runtime.

Rclone is a command-line program for managing files on cloud storage; among other things, it can mount a cloud storage remote on the host filesystem. It supports dozens of well-known services such as Google Drive, Dropbox, AWS S3, Amazon Drive, etc. I decided to stick with Google Drive since I have 100GB of underutilized storage. I plan on incorporating Rclone into a self-built TrueNAS SCALE NAS as a redundant backup in the future.

Here are the steps to setting up Google Drive with Rclone:

- Start with this: https://rclone.org/drive/#making-your-own-client-id

- Don't forget to submit the app for verification; approval is automatic. The generated auth token will not renew while the app is in development mode.

- Then follow this guide to attach the Google Drive account to Rclone: https://rclone.org/drive/

```yaml
volumes:
  - ${rclone_dir}/config:/config/rclone
  - ${rclone_dir}/mount:/data:shared
  - /etc/passwd:/etc/passwd:ro
  - /etc/group:/etc/group:ro
```

The /etc/passwd and /etc/group mounts are required for FUSE to work properly inside the container. Additionally, a premade configuration from another Rclone instance is needed, which can be generated by completing the Rclone setup wizard for Google Drive. Make sure to copy the configuration to the project's folder: ghost-linode-terraform/scripts/rclone/config/

In my setup, the Google Drive remote is called 'gdrive'. This name needs to be reflected in the docker-compose command block:

```yaml
command: "mount gdrive: /data"
```

The default mount path on the host is /root/rclone/mount.

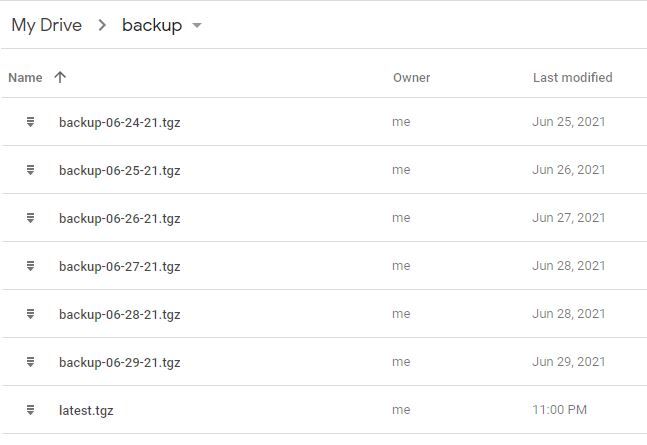

The backup.sh script is responsible for creating tarballs of the Ghost blog directory on the host machine. By default, it maintains a rolling 7-day backup, with latest.tgz holding the archive from the most recent run.
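One simple way to get a rolling 7-day window is to name each archive after the day of the week, so every slot is overwritten exactly one week later. The bash sketch below illustrates that idea; it is an assumed approach with a made-up function name, not necessarily how the repo's backup.sh does it.

```bash
# Hypothetical sketch, not the repo's backup.sh: one archive per weekday
# (ghost-1.tgz .. ghost-7.tgz), so each slot is overwritten a week later,
# plus a copy of the newest run as latest.tgz.
backup_ghost() {
  local ghost_dir="$1" backup_dir="$2" day archive
  day=$(date +%u)                       # 1 = Monday ... 7 = Sunday
  archive="$backup_dir/ghost-$day.tgz"
  mkdir -p "$backup_dir" || return 1
  # Write to a temp name first so a failed run never clobbers a good archive.
  tar -czf "$archive.tmp" -C "$ghost_dir" . || { rm -f "$archive.tmp"; return 1; }
  mv "$archive.tmp" "$archive" && cp "$archive" "$backup_dir/latest.tgz"
}
```

With the defaults from this post, a call might look like backup_ghost /root/ghost /root/rclone/mount/ghost-backup, where the ghost-backup folder name is an assumption. The day-of-week naming avoids any explicit cleanup step: there are never more than seven dated archives.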

A crontab entry is added through Linode's Stackscript and runs daily at 11PM (system time). Keep in mind that UTC is the default system time zone.
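In cron syntax, "daily at 11PM" is 0 23 * * *. A Stackscript that might run more than once should avoid stacking duplicate entries; the bash sketch below (hypothetical helper name, script path assumed from the default rclone directory) rewrites the crontab with exactly one backup job.

```bash
# Hypothetical helper: read the current crontab on stdin, drop any previous
# entry for the script, and append a daily 11PM (system time, UTC by default) job.
# Cron fields: minute hour day-of-month month day-of-week.
with_backup_job() {
  local script="$1"
  grep -v -F -- "$script" || true   # keep every other existing entry
  printf '0 23 * * * %s\n' "$script"
}
# On the server (path is an assumption):
#   (crontab -l 2>/dev/null || true) | with_backup_job /root/rclone/backup.sh | crontab -
```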

Deployment

If you have not done so already, install the Terraform CLI on your local machine. Follow this installation guide.

Clone project

```bash
git clone https://github.com/foureight84/ghost-linode-terraform.git && cd ghost-linode-terraform
```

Initialize Terraform workspace

```bash
terraform init
```

Create your tfvars definition file

```bash
cp terraform.tfvars.example defined.tfvars
```

Open defined.tfvars and fill in all required values

```hcl
//project_dir = "" // default /root/ghost
//rclone_dir = "" // default /root/rclone
linode_api_token = ""
linode_label = ""
linode_image = "linode/ubuntu20.04" // see terraform linode provider documentation for these values
linode_region = "us-west" // see terraform linode provider documentation for these values
linode_type = "g6-nanode-1" // see terraform linode provider documentation for these values
linode_authorized_users = [""] // user profile created on linode with associated ssh pub key. https://cloud.linode.com/profile/keys
linode_group = "blog"
linode_tags = [ "ghost", "docker" ]
linode_root_password = ""
cloudflare_domain = "" // requires that your domain is already managed by cloudflare. value ex: foureight84.com
cloudflare_email = ""
cloudflare_api_key = "" // not to be mistaken with cf api token
letsencrypt_email = ""
ghost_blog_url = "" // ex. blog.foureight84.com
enable_rclone = false // boolean (default false). change to true if using rclone. see README.md in rclone directory on how to setup config beforehand
```

Double-check that everything is correct and get a deployment preview

```bash
terraform plan -var-file="defined.tfvars"
```

Apply Terraform changes to production

```bash
terraform apply -var-file="defined.tfvars"
```
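As a variation on the last two steps, a small wrapper can refuse to run while defined.tfvars still contains empty "" values (easy to miss with 17 inputs), save the plan with -out, and then apply exactly the plan that was reviewed. This is a hypothetical bash sketch, not part of the repo; deploy, empty_tfvars, and the tfplan file name are assumptions, and the empty-value check is a simple text match rather than a real HCL parser.

```bash
# Hypothetical deploy wrapper (not part of the repo).

# Print the names of uncommented variables whose value is "" or [""].
empty_tfvars() {
  local file="$1"
  grep -v -E '^[[:space:]]*(//|#)' "$file" \
    | grep -E '^[[:space:]]*[A-Za-z_][A-Za-z0-9_]*[[:space:]]*=[[:space:]]*(""|\[[[:space:]]*""[[:space:]]*\])' \
    | sed -E 's/^[[:space:]]*([A-Za-z_][A-Za-z0-9_]*).*/\1/'
  return 0   # "no matches" is not an error here
}

# Check inputs, plan to a file, confirm, then apply exactly that saved plan.
deploy() {
  local varfile="${1:-defined.tfvars}" missing answer
  [ -f "$varfile" ] || { echo "missing $varfile" >&2; return 1; }
  missing=$(empty_tfvars "$varfile")
  if [ -n "$missing" ]; then
    echo "empty values in $varfile:" >&2
    echo "$missing" >&2
    return 1
  fi
  terraform init -input=false || return 1
  terraform plan -input=false -var-file="$varfile" -out=tfplan || return 1
  read -r -p "Apply this plan? [y/N] " answer || return 1
  [ "$answer" = "y" ] || { echo "aborted" >&2; return 1; }
  terraform apply -input=false tfplan   # a saved plan is applied without re-prompting
}
```

Running deploy defined.tfvars from the project directory would replace the separate plan and apply commands above. Applying the saved tfplan file guarantees that what gets deployed is the preview you just read.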